A beginner’s guide to wholesale market modelling

Context – where we left off

Last time we spoke about the use of wholesale market models to answer a wide variety of questions across the energy sector. We described the concepts of linear programming, solvers, and even how we derive prices from these tools. We then explained the first step in the modelling process – capacity expansion modelling, which allows us to determine the least-cost combination of generation technologies to meet demand.

But there were some aspects missing from our capacity expansion model, because of the computational complexity of including them. We concluded by foreshadowing the next step in the standard modelling framework, ie, the simulation of real-time dispatch – the subject of this second article.

Part (2) Operational dispatch modelling – creating a more granular picture

Having built the arena, the next step is to watch the operation of the system play out. There are three specific factors that we wish to capture in more detail in this process:

- Bids from participants.

- Detailed operational plant limitations, such as ramp rates, minimum-stable levels and outages.

- Variation in renewable energy and demand traces.

We note that there is no reason that a capacity expansion model could not have captured each of these factors, save for the computational burden of doing so. When we have limited time and resources, it makes sense to ‘lock the build’, and examine these other factors in more detail.

The process is to run a time-sequential simulation model, which takes as an input the technologies and capacities from the capacity expansion model. This is a far simpler problem, which can be run many times over with different inputs for demand, renewable energy traces, fuel costs, and any other parameters of interest.
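To make the idea concrete, the sketch below steps through a short trace with the build held fixed. It is a minimal illustration only: the fleet, the traces, the `PRICE_CAP` value and the function names are all hypothetical, and units are dispatched at SRMC rather than on bids.

```python
# Hypothetical fleet handed over from the capacity expansion step (the "locked build").
FLEET = {
    "solar": {"capacity_mw": 100.0, "srmc": 0.0},
    "coal": {"capacity_mw": 200.0, "srmc": 40.0},
    "gas": {"capacity_mw": 100.0, "srmc": 120.0},
}
PRICE_CAP = 15000.0  # illustrative value, not taken from the article


def dispatch_interval(fleet, demand_mw, availability):
    """Dispatch one interval in ascending SRMC order.

    Returns (dispatch by unit, price, unserved MW).
    """
    remaining = demand_mw
    dispatch, price = {}, 0.0
    for name, unit in sorted(fleet.items(), key=lambda kv: kv[1]["srmc"]):
        available = unit["capacity_mw"] * availability.get(name, 1.0)
        mw = min(available, remaining)
        dispatch[name] = mw
        if mw > 0:
            price = unit["srmc"]  # the last unit dispatched sets the price
        remaining -= mw
    if remaining > 1e-9:
        price = PRICE_CAP  # demand cannot be met in this interval
    return dispatch, price, remaining


def simulate(fleet, demand_trace, availability_trace):
    """Step through the traces in time order with the build held fixed."""
    return [
        dispatch_interval(fleet, demand, availability)
        for demand, availability in zip(demand_trace, availability_trace)
    ]


demand_trace = [150.0, 250.0, 280.0]
solar_trace = [{"solar": 0.8}, {"solar": 0.5}, {"solar": 0.0}]
results = simulate(FLEET, demand_trace, solar_trace)
prices = [price for _, price, _ in results]
```

Because each run is this cheap, the same fleet can be re-simulated with different demand, renewable or fuel cost inputs simply by calling `simulate` again with new traces.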

In the remainder of this article, we will examine each of the three factors described above, and how they are handled in the dispatch modelling.

Choose your poison – bidding assumptions

Let us start with the most important, and most controversial, of all assumptions: bids from technologies. So often when we try to explain a strange phenomenon in the market, the answer comes back: bidding behaviour. An unexpected price spike to the market price cap – bidding behaviour; counter-price flows on an interconnector – bidding behaviour; high prices for sustained periods on a mild day – bidding behaviour. Indeed, if one is at a loss to explain a phenomenon, the best bet to avoid embarrassment is to give a sagely shake of the head and appeal to the higher power: ‘bidding behaviour’.

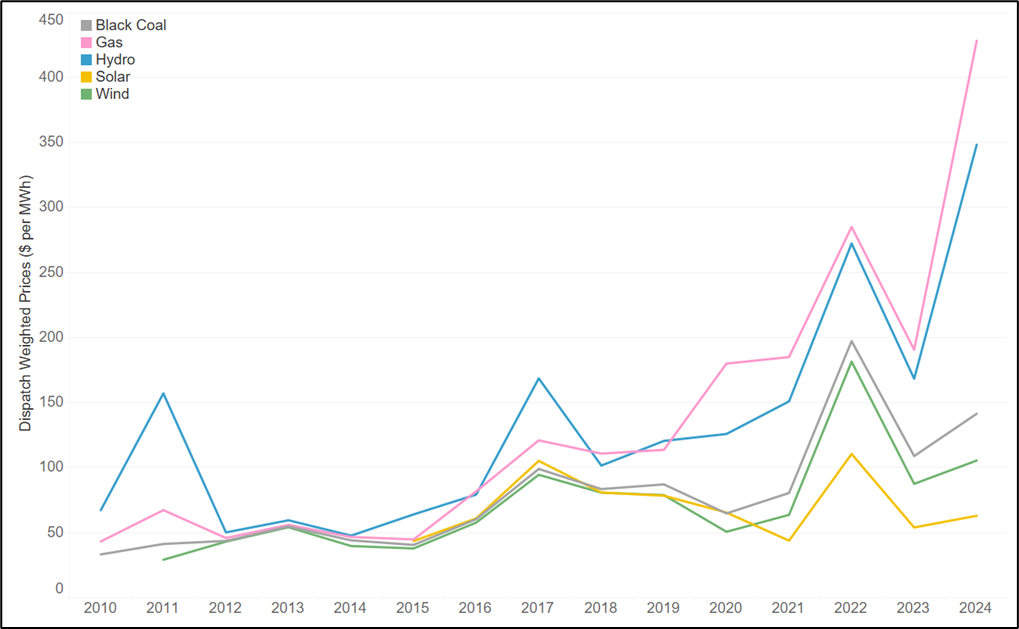

Bids are of such great importance because they collectively give rise to the supply curve that, together with demand, is responsible for price formation. And because demand is highly inelastic – ie, it does not respond to price – it is the supply curve that is responsible for many of the phenomena in the sector that are otherwise inexplicable.
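A small sketch shows why the supply curve matters so much when demand is inelastic. The bid bands, demand level and `PRICE_CAP` below are invented for illustration. Bids are stacked in price order, and the price is set by the band that the (fixed) demand lands in.

```python
PRICE_CAP = 15000.0  # illustrative value, not taken from the article


def clearing_price(bids, demand_mw):
    """Stack (price, mw) bid bands in price order and return the marginal price."""
    cumulative = 0.0
    for price, mw in sorted(bids):
        cumulative += mw
        if cumulative >= demand_mw:
            return price
    return PRICE_CAP  # not enough volume offered to meet demand


# Hypothetical aggregate bid stack: (price in $/MWh, volume in MW).
base_bids = [(0.0, 300.0), (40.0, 400.0), (90.0, 200.0), (300.0, 100.0), (PRICE_CAP, 50.0)]

# Same total capacity, but 100 MW is rebid from the $90 band to the price cap.
rebid = [(0.0, 300.0), (40.0, 400.0), (90.0, 100.0), (300.0, 100.0), (PRICE_CAP, 150.0)]

demand_mw = 850.0  # inelastic: identical in both cases
base_price = clearing_price(base_bids, demand_mw)
rebid_price = clearing_price(rebid, demand_mw)
```

In this toy case, moving 100 MW between bands lifts the price from $90 to $300 with no change in demand or installed capacity.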

But what bidding assumptions should we use? Bids can vary from day to day, hour to hour and, even in some cases, minute to minute because of rebidding. Although it is entirely possible to reconstruct outcomes given historical bids, making projections about future bidding behaviour is far more complex. This is particularly the case in an environment where the technology capacity mix is changing, eg, when new plants are rapidly entering the system, or older plants are retiring.

The Holy Grail of bidding assumptions is some mechanism for determining how plants will bid in their capacity in any future world, whether that world be defined by:

- a high penetration of low-cost renewables,

- an aging and less reliable thermal fleet,

- an increasingly interconnected system, or

- any combination of the above.

Despite many claims to the contrary, no such mechanism exists. Yes, it is possible to create bids based on rules, or game-theoretic frameworks, but in the end they all result in the same outcome – players will bid some proportion (potentially none) of their capacity at a level that exceeds their short-run marginal cost. Some typical assumptions are as follows.

Approach 1: Contract bidding:

Players will bid in their contracted level of output at SRMC, but will then bid all remaining output with some mark-ups. A problem with this outcome is that we must assume a contract level. How contracting changes with changes in market conditions and the change of asset ownership will be difficult to forecast for every plant into the future, and so requires us to make assumptions. In effect, we are still assuming a supply curve.
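As a hedged illustration of Approach 1, the function below builds a bid stack for a single plant. The contract level, the mark-ups and the even split of uncontracted volume across mark-up bands are all assumptions made for the example; they are exactly the inputs a modeller would have to choose.

```python
def contract_bids(capacity_mw, srmc, contract_mw, markups):
    """Return (price, mw) bands: contracted volume at SRMC, the rest marked up.

    Uncontracted volume is split evenly across the mark-up bands
    (an assumption made for this sketch).
    """
    if not markups:
        raise ValueError("at least one mark-up is required")
    contracted = min(contract_mw, capacity_mw)
    bands = [(srmc, contracted)] if contracted > 0 else []
    uncontracted = capacity_mw - contracted
    if uncontracted > 0:
        per_band = uncontracted / len(markups)
        bands += [(srmc * (1 + markup), per_band) for markup in markups]
    return bands


# Hypothetical 500 MW plant with an SRMC of $40/MWh and mark-ups of 50% and 200%.
highly_contracted = contract_bids(500.0, 40.0, contract_mw=350.0, markups=[0.5, 2.0])
lightly_contracted = contract_bids(500.0, 40.0, contract_mw=200.0, markups=[0.5, 2.0])
```

Lowering the assumed contract level from 350 MW to 200 MW doubles the volume offered above SRMC, which is why the contract assumption is, in effect, a supply curve assumption.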

Approach 2: Game theoretic bidding:

Players are assumed to bid so as to maximise their profit, subject to the strategies of other players. The assumption is that by iterating between players and giving them opportunities to change their bids, we will converge to a Nash equilibrium (ie, a world where nobody has a reason to change their bids unilaterally). This is not mathematically sound – there is no assurance of convergence to a single Nash equilibrium given the way the supply curve is represented – and it drastically increases the computational overhead of the exercise, slowing down run times and forcing the modeller to make simplifications in other parts of the model. Moreover, the assumption that generators seek outcomes that are Nash is elegant but unrealistic. As one of my old colleagues was fond of saying: ‘I’ve never seen a rebid reason that says “Seeking Nash Equilibrium”’.
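The toy example below illustrates one facet of this problem: the iteration can settle on different equilibria depending on nothing more than who moves first. Everything in it is hypothetical (two identical 60 MW players, a three-point bid grid, uniform pricing, and ties broken towards the lowest bid); it is a sketch of iterated best response, not a description of any particular commercial model.

```python
BID_GRID = [20.0, 100.0, 300.0]  # allowed bids in $/MWh (hypothetical)
CAPACITY_MW = 60.0               # each player's capacity
DEMAND_MW = 100.0                # inelastic demand; neither player can meet it alone
SRMC = 10.0


def clear(bid_a, bid_b):
    """Uniform-price clearing for two players; returns (mw_a, mw_b, price)."""
    if bid_a == bid_b:
        return DEMAND_MW / 2, DEMAND_MW / 2, bid_a
    low_mw = min(CAPACITY_MW, DEMAND_MW)
    high_mw = DEMAND_MW - low_mw
    price = max(bid_a, bid_b) if high_mw > 0 else min(bid_a, bid_b)
    return (low_mw, high_mw, price) if bid_a < bid_b else (high_mw, low_mw, price)


def best_response(player, bids):
    """Bid on the grid that maximises the player's profit, holding the rival fixed."""
    other = "B" if player == "A" else "A"

    def profit(bid):
        trial = {player: bid, other: bids[other]}
        mw_a, mw_b, price = clear(trial["A"], trial["B"])
        return (price - SRMC) * (mw_a if player == "A" else mw_b)

    return max(BID_GRID, key=profit)  # ties resolve to the lowest bid


def iterate(first_mover, start_bid=300.0, max_rounds=20):
    """Alternate best responses until no player changes their bid."""
    bids = {"A": start_bid, "B": start_bid}
    order = ["A", "B"] if first_mover == "A" else ["B", "A"]
    for _ in range(max_rounds):
        previous = dict(bids)
        for player in order:
            bids[player] = best_response(player, bids)
        if bids == previous:
            return bids
    return None  # no convergence within max_rounds


a_first = iterate("A")
b_first = iterate("B")
```

Both results are Nash equilibria on this grid, yet they allocate dispatch and profit to different players. In richer settings the iteration may also fail to settle at all, which is why `iterate` returns `None` after `max_rounds`.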

Approach 3: Using historical, or other assumed profile of, bids:

In this case, players are assumed to bid their capacity in at levels based on recent outcomes. This approach suffers from the weakness that the bids are once again being driven by the world we know and understand, and may not align with future changes in contracting behaviour, portfolio changes, or other developments of the system.

There seem to be no good solutions – one must choose their poison. At one time or another, we have used each of the above methods depending on the task at hand. But in general we have found that the approaches that limit the computational complexity (ie, Approaches 1 and 3) are more favourable, because they allow us to investigate different sensitivities to the supply curve. In addition, using historical information tends to provide a helpful reference point for any such discussion. For example, we can ask the question of ‘what happens if more generation is bid in at the market price cap than historically’, or ‘what if batteries start to bid more generation at a lower bid band’.
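The kind of sensitivity described above can be sketched in a few lines. The historical bid stack, the demand trace, the `PRICE_CAP` value and the 10% shift are all invented for illustration; the point is only that a cheap dispatch model lets us perturb the supply curve and rerun.

```python
PRICE_CAP = 15000.0  # illustrative value, not taken from the article


def clearing_price(bids, demand_mw):
    """Marginal price from a stack of (price, mw) bid bands."""
    cumulative = 0.0
    for price, mw in sorted(bids):
        cumulative += mw
        if cumulative >= demand_mw:
            return price
    return PRICE_CAP


def shift_to_cap(bids, share):
    """Move `share` of every below-cap band's volume to the market price cap."""
    below_cap = [(price, mw) for price, mw in bids if price < PRICE_CAP]
    already_at_cap = sum(mw for price, mw in bids if price >= PRICE_CAP)
    moved = sum(mw * share for _, mw in below_cap)
    kept = [(price, mw * (1 - share)) for price, mw in below_cap]
    return kept + [(PRICE_CAP, already_at_cap + moved)]


def average_price(bids, demand_trace):
    """Simple (not volume-weighted) average price across a demand trace."""
    return sum(clearing_price(bids, d) for d in demand_trace) / len(demand_trace)


# Hypothetical historical bid stack and demand trace.
historical_bids = [(0.0, 300.0), (40.0, 400.0), (90.0, 200.0), (300.0, 100.0)]
demand_trace = [500.0, 700.0, 850.0, 950.0]

base_average = average_price(historical_bids, demand_trace)
sensitivity_average = average_price(shift_to_cap(historical_bids, 0.10), demand_trace)
```

Here the historical stack is the reference point and the shifted stack is the ‘what if’. A battery sensitivity could be built the same way by moving volume to a lower band instead.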

Regardless, there is no way to avoid the challenge that at its core we are making assumptions about future behaviours and that as the power system changes, the current information set we have will become more out of date.

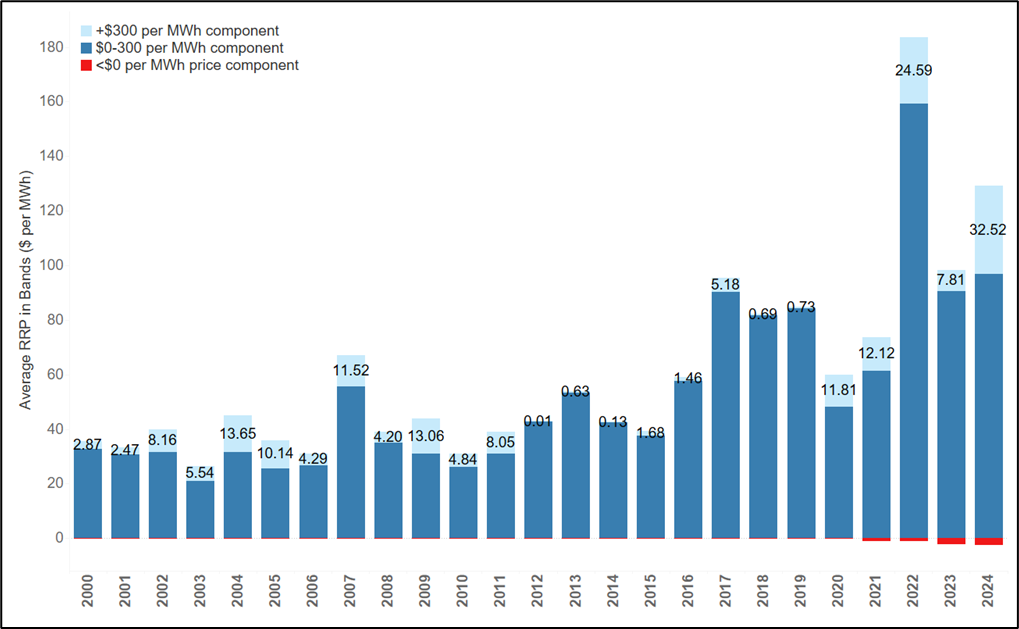

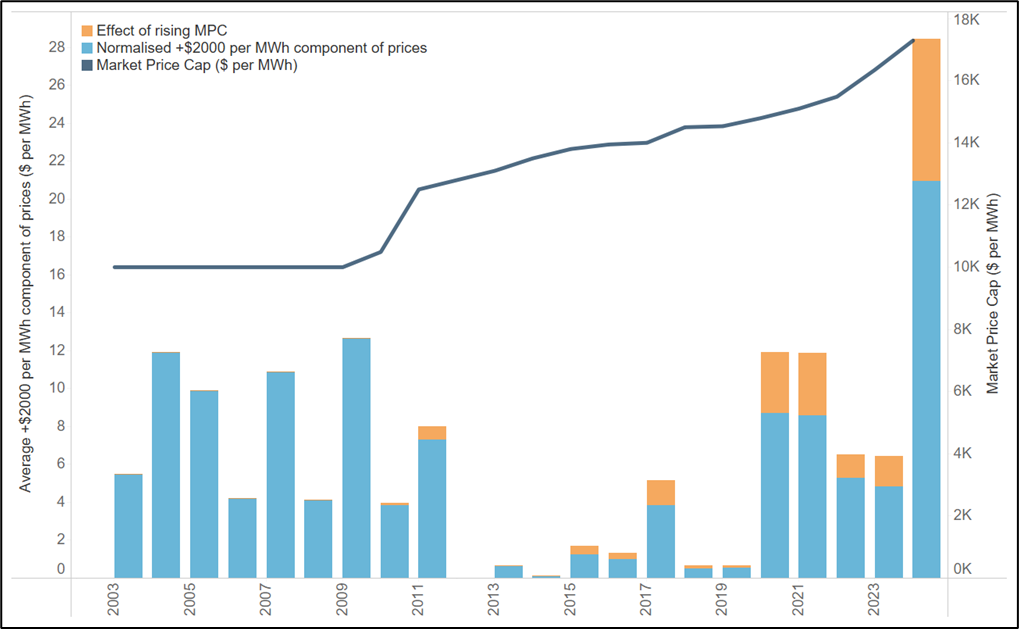

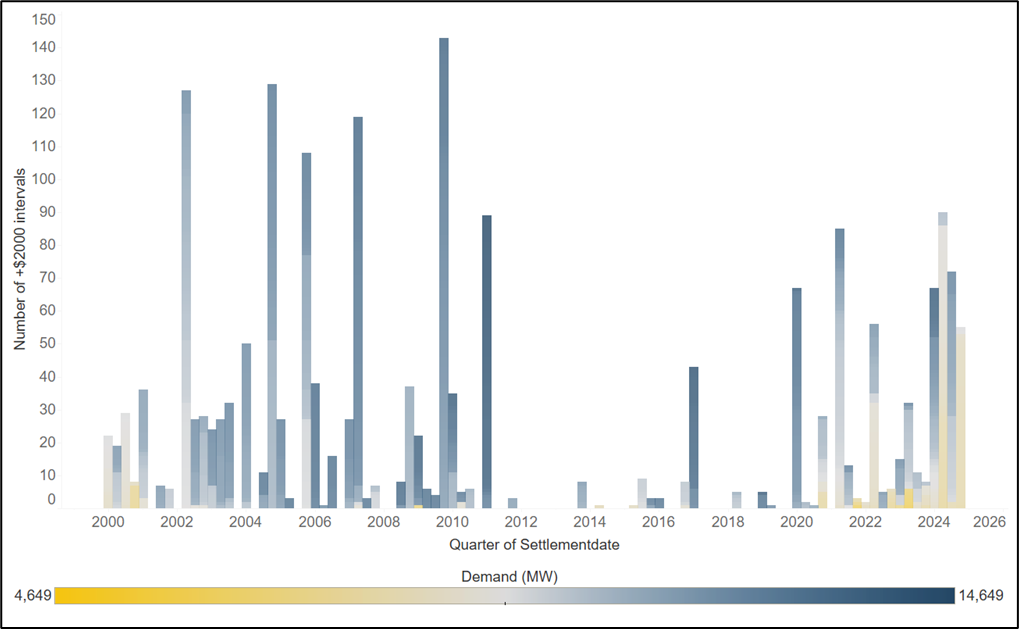

In addition, we recognise that all existing models are poor at capturing the type of volatility (ie, instances of super-high prices well in excess of the $300 – $500 range) that is so important to market outcomes. This is because volatility occurs in the actual market due to unexpected transient factors such as system constraints (eg, temporary local FCAS constraints due to the risk of islanding), occasional bidding behaviours that are often quickly outcompeted by competitor responses, and unexpected major events such as an explosion at a major unit or a lightning strike on a transmission line. Put simply, super-high prices are difficult to forecast not because the market model is not “good enough” (eg, 30-minute vs 5-minute resolution) but because the modeller cannot systematically forecast transient market disturbances. Where this super-high price volatility is included, it is typically introduced through post-processing of results, such as adding some historical “noise” component – it is not an outworking of the model. This is a clear limitation of market modelling. Our advice is to be aware of this limitation and be suspicious of anyone who tells you they have a model that can forecast this type of volatility.
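To show what ‘post-processing’ means in practice, here is one hypothetical form it could take: overlaying randomly placed spikes, drawn from a list of historical spike prices, on top of the modelled prices. The function name, the spike probability and the sample values are assumptions for illustration, not a method endorsed in this article.

```python
import random


def overlay_spikes(modelled_prices, historical_spikes, spike_probability, seed=0):
    """Replace a random subset of modelled prices with sampled historical spikes.

    The spikes are injected after the model has run; the model itself
    plays no part in deciding when or how large they are.
    """
    rng = random.Random(seed)
    adjusted = []
    for price in modelled_prices:
        if rng.random() < spike_probability:
            adjusted.append(max(price, rng.choice(historical_spikes)))
        else:
            adjusted.append(price)
    return adjusted


modelled = [45.0, 60.0, 80.0, 120.0, 95.0, 50.0]   # hypothetical model output
spikes = [2500.0, 9000.0, 15000.0]                 # hypothetical historical spike prices
with_noise = overlay_spikes(modelled, spikes, spike_probability=0.02, seed=42)
```

The sketch makes the limitation plain: the timing of the spikes comes from a random number generator, not from anything the model knows about the system.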

High fidelity modelling – capturing detailed operational plant limitations and outages

One of the benefits of ‘locking the build’ is that we can simultaneously unlock large amounts of computational power to capture other factors. This can come in the form of more runs of the model (see next section), or in the form of increased fidelity of representation of the system.

There are three, and potentially many more, ways that this computational windfall is spent. The first is the inclusion of operational plant limitations, such as ramp rate and minimum stable level constraints. Historically ramp rates were generally of limited importance because of the relatively small amounts of ramping required across the system. But with the advent of renewables – in particular solar – minimum stable levels in the middle of the day and ramping on either side of the morning and evening peaks have become more and more important. Dispatch models can easily capture the inter-temporal restrictions on generation caused by limited plant flexibility, and so the benefits of fast-ramping technologies are more evident.
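The inter-temporal effect of these limits can be seen in a small sketch. It assumes a single unit that stays online throughout and simply tracks a target (for example, demand net of solar) as closely as its limits allow. In a real dispatch model these limits would be constraints inside the optimisation, and all numbers here are invented.

```python
def follow_target(targets_mw, start_mw, capacity_mw, min_stable_mw, ramp_mw):
    """Track a target as closely as ramp and minimum stable level limits allow.

    ramp_mw is the maximum change in output per interval, up or down.
    """
    output, previous = [], start_mw
    for target in targets_mw:
        low = max(min_stable_mw, previous - ramp_mw)   # cannot ramp down faster, or go below min stable
        high = min(capacity_mw, previous + ramp_mw)    # cannot ramp up faster, or exceed capacity
        mw = min(max(target, low), high)
        output.append(mw)
        previous = mw
    return output


# Hypothetical residual demand across a sunny day: a midday trough, then an evening ramp.
residual_demand = [300.0, 150.0, 80.0, 250.0, 400.0]
coal_output = follow_target(
    residual_demand, start_mw=300.0, capacity_mw=400.0, min_stable_mw=120.0, ramp_mw=100.0
)

# Positive gap: shortfall that a faster unit must cover.
# Negative gap: surplus that must be curtailed or stored.
gap = [target - mw for target, mw in zip(residual_demand, coal_output)]
```

In this example the unit over-generates through the midday trough and falls short on the evening ramp, which is precisely where fast-ramping technologies earn their keep.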

The second change in the dispatch modelling is the use of outages. Now here we face a conundrum: how is one best placed to capture the effect of outages, given that they are a random variable? In some studies, such as reliability modelling, we are not just interested in one realisation of outages, but in the distribution of outcomes across many potential different outage traces. Such modelling often involves rerunning the same model hundreds or even thousands of times to build a picture of the distribution of unserved energy. Here again we see the benefits of the dispatch model being simpler and faster – we can spend the computational windfall on running many different simulations, rather than just one.
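A minimal Monte Carlo sketch of this idea follows. It assumes each unit moves between ‘available’ and ‘on outage’ with fixed per-interval failure and repair probabilities, and it ignores everything except total available capacity. The unit data, probabilities and function names are hypothetical.

```python
import random


def outage_trace(n_intervals, p_fail, p_repair, rng):
    """Simulate availability (True/False) per interval for one unit."""
    available, trace = True, []
    for _ in range(n_intervals):
        if available:
            available = rng.random() >= p_fail    # stays up unless it fails
        else:
            available = rng.random() < p_repair   # stays down until repaired
        trace.append(available)
    return trace


def unserved_energy(units, demand_trace, rng):
    """Unserved energy for one outage realisation, in MW-intervals."""
    traces = [
        outage_trace(len(demand_trace), u["p_fail"], u["p_repair"], rng) for u in units
    ]
    total = 0.0
    for t, demand in enumerate(demand_trace):
        available_mw = sum(u["capacity_mw"] for u, tr in zip(units, traces) if tr[t])
        total += max(0.0, demand - available_mw)
    return total


def use_distribution(units, demand_trace, n_runs, seed=0):
    """Rerun the same system many times and return sorted unserved energy outcomes."""
    rng = random.Random(seed)
    return sorted(unserved_energy(units, demand_trace, rng) for _ in range(n_runs))


units = [{"capacity_mw": 400.0, "p_fail": 0.02, "p_repair": 0.2} for _ in range(3)]
demand_trace = [700.0] * 48
distribution = use_distribution(units, demand_trace, n_runs=200, seed=1)
```

The output is a full distribution rather than a single number, so we can read off how often unserved energy occurs at all, not just its average. Multiplying by the interval length converts MW-intervals to MWh.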

But what if we are restricted to just one simulation? It would seem that in this world, we need some concept of a ‘normal’ outage pattern. This is indeed the approach that is taken by most modellers. For example, some modellers derate all capacity uniformly over the course of the year. However, this averaging approach tends to crimp volatility further, because it does not capture the extreme events which occur when outages are greater than their long-term average. We have typically adopted the approach of examining many different outage profiles and selecting the median profile according to a metric of the frequency of extreme events. Regardless of the approach adopted, it is important to understand the degree to which outages are affecting outcomes, because a single sustained outage at the wrong time can lead to a massive impact on reliability.
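The difference between the two approaches can be sketched as follows. The metric used here (the number of intervals in which available capacity falls below demand) is only one possible measure of ‘frequency of extreme events’ and is our assumption for the example, as are the profiles and the 5% outage rate.

```python
def extreme_event_count(available_mw, demand_mw):
    """Count intervals in which available capacity falls short of demand."""
    return sum(1 for a, d in zip(available_mw, demand_mw) if a < d)


def median_profile(profiles, demand_mw):
    """Pick the outage profile with the median number of extreme events."""
    ranked = sorted(profiles, key=lambda p: extreme_event_count(p, demand_mw))
    return ranked[len(ranked) // 2]


def derated_profile(capacity_mw, forced_outage_rate, n_intervals):
    """Uniform derating: the same reduced capacity in every interval."""
    return [capacity_mw * (1 - forced_outage_rate)] * n_intervals


demand = [900.0, 940.0, 920.0, 880.0]

# Hypothetical available-capacity profiles (MW) from three simulated outage traces.
no_outage = [1000.0, 1000.0, 1000.0, 1000.0]
long_outage = [1000.0, 800.0, 800.0, 1000.0]
short_outage = [1000.0, 1000.0, 900.0, 1000.0]

chosen = median_profile([no_outage, long_outage, short_outage], demand)
derated = derated_profile(1000.0, 0.05, len(demand))
```

The uniformly derated profile never falls below demand in this example, whereas the median profile still contains a shortfall interval. That is the sense in which averaging crimps volatility.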

These factors tend to provide more granular results, because they impose additional constraints. All else being equal:

- Operational plant limitations tighten the ability of the plant to respond to system fluctuations, so they increase the daily price spread.

- The inclusion of outages removes generation from the supply curve, so it also acts to lift price.

- More generally, any factor that adds constraints to the system will tend to lift price, whether that constraint be in the form of interconnector losses, complex heat rate equations or even cycle limits on batteries.

When all is said and done, these many different factors can give a great deal more shape to prices, as well as leading to different marginal costs or prices being observed in the system.

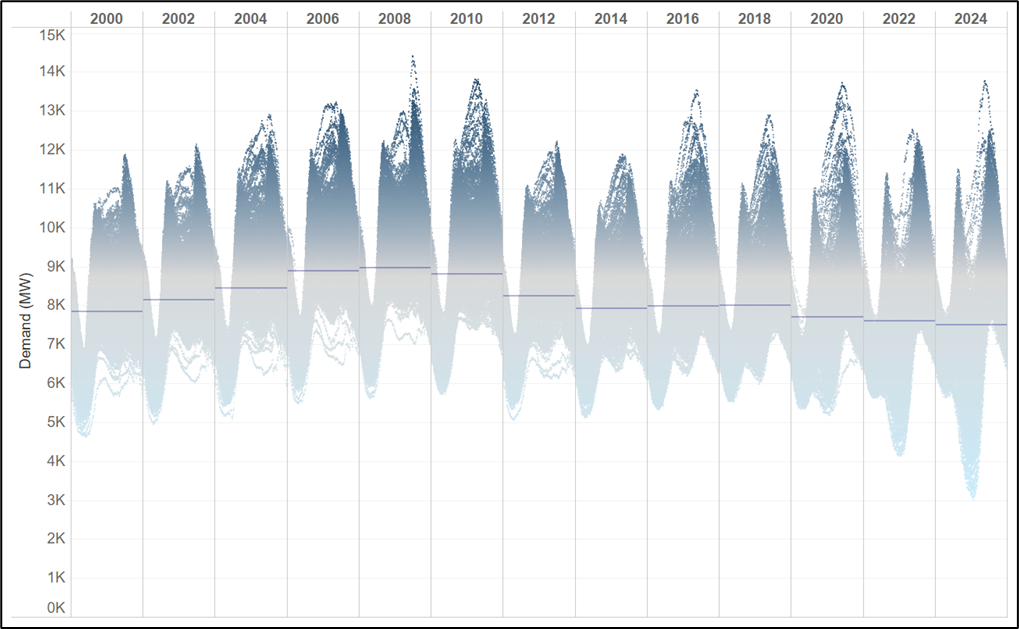

Diving into distributions – variation in renewable energy and demand traces

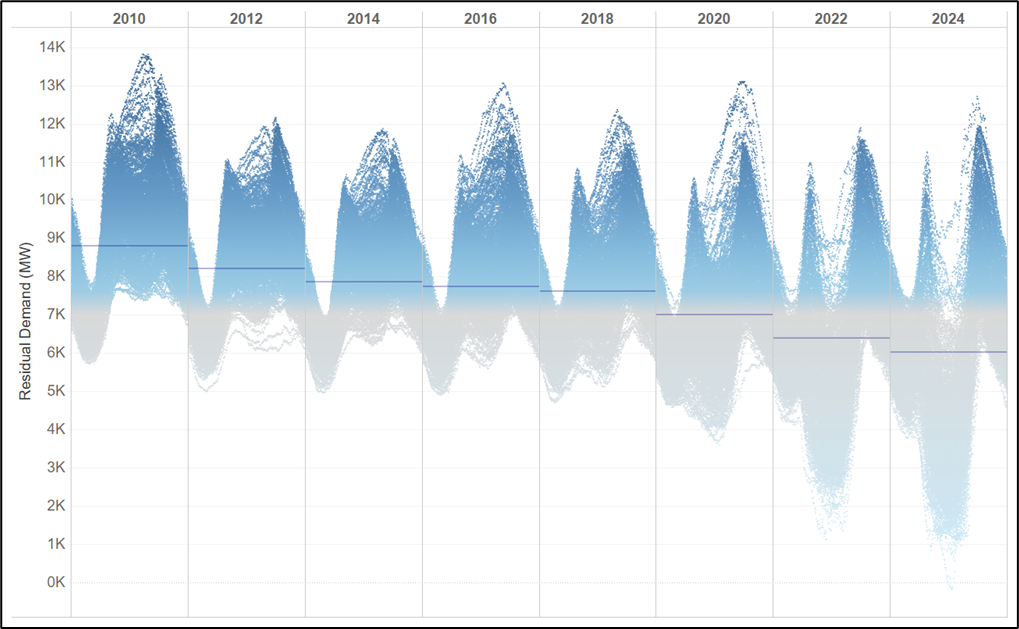

We have described using the computational windfall from locking the build that comes out of the capacity expansion model to increase the complexity of dispatch. But another way to spend that windfall is by running our dispatch models many times. This is particularly important when we want to understand how random factors influence outcomes.

We can look at many different potential realisations of a random variable, to understand not just a single point estimate of outcomes, but an entire distribution. This can help us answer questions like:

- Can our power system withstand extreme demand events?

- How might different weather conditions (ie, temperature, wind and solar irradiance) lead to different outcomes, and how different are those outcomes from one another?

The key here is to create the inputs – ie, the weather and demand traces – that will feed into these simulations. In the National Electricity Market, the market operator publishes a range of traces for demand and weather going back 13 years. But we can go further, using historical data sets such as MERRA-2 to create a longer history. The challenge is always to ensure that the weather and demand conditions remain aligned in time, so that the correlations between them are preserved. For example, it would be a mistake to use temperature from 2011 but wind data from 2022. The two would be misaligned, with the potential for outcomes such as high output from wind farms coinciding with high summer temperatures when the two did not in fact occur together. In general, the solution is to draw all the trace variables from the same historical period: it is not possible to ‘mix and match’ traces without compromising the value of the exercise.
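One simple way to enforce this alignment is to key every trace by its reference year and only ever build scenarios from years for which all variables exist. The sketch below assumes a hypothetical `{variable: {year: values}}` layout; the data values are placeholders.

```python
def aligned_scenarios(traces):
    """Group traces by reference year, keeping only years present for every variable.

    traces:  {variable: {year: [values]}}
    returns: {year: {variable: [values]}}
    """
    common_years = set.intersection(*(set(by_year) for by_year in traces.values()))
    return {
        year: {variable: by_year[year] for variable, by_year in traces.items()}
        for year in sorted(common_years)
    }


# Placeholder traces with different coverage for each variable.
traces = {
    "demand": {2011: [5200.0, 6100.0], 2012: [5000.0, 5900.0]},
    "wind": {2011: [0.35, 0.10], 2012: [0.42, 0.18], 2013: [0.27, 0.31]},
    "solar": {2012: [0.00, 0.65], 2013: [0.00, 0.70]},
}

scenarios = aligned_scenarios(traces)
```

Because each scenario is built from a single year, a simulation can never pair, say, 2011 demand with 2013 wind. Years with incomplete coverage are dropped rather than patched from another year.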

In the event that a model requires even more data than is historically available, the solution is to create synthetic data, which preserves the relationships between the variables, but which is generated using probabilistic machine learning or some other suitable technique. For more information about this type of approach, we refer the reader to Probabilistic Deep Learning by Oliver Dürr and Beate Sick.

Once we have run the model across all the available data, we can look at the distribution of outcomes and see how much additional information has been revealed. In our opinion, this type of ‘stress testing’ is massively underapplied across the sector. And even when it is applied, for example in reliability studies, not enough analysis occurs of the distribution of outcomes. As more and more data sets become available, and the system gets more and more dependent on random factors, this type of approach will become increasingly powerful.

Where to from here?

So we now have an end-to-end modelling process. But even after two articles, we have barely scratched the surface. The power system is a complex beast, and a model that seeks to simulate its operation will be similarly intricate.

This intricacy can sometimes lead modellers to avoid talking about the fine details of their modelling and, in some instances, to use modelling to justify poor decisions.

With this in mind, we think that the most helpful tool for someone trying to engage with, or commission, energy market modelling is a guide to some of these tricks. In our final instalment of this series, we therefore examine the assumptions and methods – the Dark Arts – that your modeller would rather not talk about. Our intention in doing so is to arm you with the basics of ‘Defence against the dark arts’. This is the information you need to understand not only what models cannot do but, more importantly, the many powerful questions that can indeed be answered by modelling if we are able to stretch our understanding.